The Dark Side of A.I.: Understanding the Dangers of Deepfake Images

Reality Rewritten: The Menace of Deepfake Images

Hello, fellow reader!

I am Abhishek Verma, back with another piece on a topic that has been looming over the realm of artificial intelligence: the disturbing rise of deepfake images and their potential impact on women.

While A.I. has opened new avenues for innovation and creativity, it has also been used nefariously to create manipulated images and videos that are almost indistinguishable from reality. In this blog, we will explore the dangers of deepfake images, particularly how they can be misused and the specific concerns they pose for women in society.

Let's discuss the history and beginnings of Deepfake Technology.

What is Deepfake?

Deepfakes (a portmanteau of "deep learning" and "fake") are synthetic media that have been digitally manipulated to replace one person's likeness convincingly with that of another. Deepfakes are the manipulation of facial appearance through deep generative methods.

- Wikipedia

Confused? It's just fake media like images, audio and videos created using deep learning technology.

Deepfake technology leverages powerful A.I. algorithms to superimpose one person's likeness onto another's, creating realistic yet fabricated videos and images.

The Rise of Deepfake Technology

Just like other technologies, Deepfake as a technology had humble beginnings. It was mainly used for entertainment purposes like swapping faces in movies or viral videos. Even social media apps like Snapchat and Instagram use this technology for filters on photos and videos which are loved by their users.

However, just like other technologies, deepfake technology fell into the wrong hands, and some people started using it for misinformation, identity theft and, worst of all, revenge porn.

The term "deepfakes" was coined by a Reddit user, u/deepfakes, who created the r/deepfakes Reddit community, where the user started posting fake pornographic images and videos of celebrities like Gal Gadot, Taylor Swift, Scarlett Johansson and others, made by superimposing their faces onto existing videos.

After the community made headlines, Reddit banned r/deepfakes and announced a new rule against “non-consensual intimate media”.

Misuse and Its Impact on Females

One of the most troubling aspects of deepfake technology is its potential to harm women. Deepfake images have been used for non-consensual pornography, cyberbullying, and defamatory purposes, leading to severe emotional and psychological distress for victims. Female public figures and even ordinary women are at risk of having their identities exploited and tarnished, causing reputational damage and undermining their sense of safety.

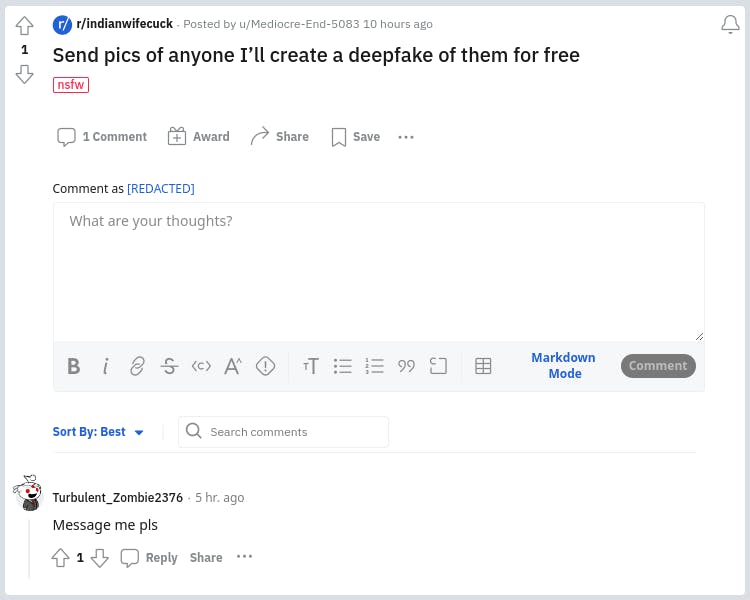

During my research on this topic, I stumbled upon alarming evidence of the dark underbelly of deepfake technology. Shockingly, there are hundreds of communities on platforms like Reddit, Discord, and Matrix solely dedicated to creating non-consensual pornography of female celebrities. Even more distressing is the fact that some individuals are shamelessly requesting deepfakes of ordinary women.

This disturbing reality makes it clear that in this new era of Artificial Intelligence and Deepfakes, no one, whether a female celebrity or an ordinary woman, is truly safe from exploitation.

Telegram: The Hub of Deepfake Bots

The convergence of Telegram's infamous piracy issues with the surge of deepfake bots is a pressing concern. These bots now offer deepfake porn services, and anyone can access them for a small amount of money. This accessibility creates a significant risk of harmful content being made of individuals, particularly women, without their consent.

What can we do to stop it?

If you find a deepfake bot on Telegram, report its output images as "Pornography" from within the app so that Telegram can ban it. I reported around 15 bots, and around 9 of them have since been banned from the platform.

Once a bot is banned, you'll see a message like this:

You can also use the Telegram support page to report such bots.

Unveiling the Experiment

As someone who has delved into the subject of deepfakes and their potential dangers, I couldn't resist the urge to gain practical insights into this alarming technology. Hence, I embarked on an experiment to witness firsthand how it operates.

Using readily available deepfake tools, I decided to test their capabilities on AI-generated images of female models. The results of this experiment were both astounding and unsettling. The A.I. algorithms seamlessly manipulated these images, crafting fabricated content that was eerily realistic.

Disclaimer: Please note that the experiment conducted in this research aims to analyze deepfake tools and bots. It is crucial to emphasize that no images of any real person were used during this study. All images used are solely AI-generated, ensuring that no individual's privacy or consent was compromised in any way. The intention behind this experiment is to explore the capabilities of deepfake technology responsibly and without causing harm to anyone involved.

Here's what I did in this experiment:

Collected 50+ Deepfake tools and Telegram Bots

Created an AI-generated image of a fictional female model

Tested the image on each tool and bot

The results were incredibly realistic, even for someone familiar with Deepfakes

Imagine the impact on individuals who are unaware of this technology

Results of the experiment

Here's one of the output images (censored for this article for obvious reasons) showing the capabilities of this technology:

As evident from the comparison, the above Deepfake image is virtually indistinguishable from the original one. This technology is highly perilous, as it can produce deceptive content with alarming accuracy. The dangers lie in its potential misuse to manipulate and deceive, putting individuals at risk of privacy violations, reputational harm, and emotional distress. The realistic nature of Deepfakes underscores the urgent need for increased awareness, responsible AI usage, and robust measures to mitigate its negative impact on society.

Note: I am not sharing the names or any other details of the tools and bots used in this experiment, as they can be used for malicious purposes. However, if you want to know about the tools or access the uncensored results of the experiment, and your reason is convincing, I can send you the results along with the tools used. Please submit your request here.

What can we do?

In this situation, the responsibility to combat deepfakes largely lies with the government and big tech companies. As individuals, we may not have direct control over these technologies, but we can advocate for change. Governments must comprehend the risks associated with deepfakes and take proactive measures by strengthening policies and enacting robust laws to address their misuse.

At the same time, big tech companies must play a pivotal role by investing in technologies that can detect and prevent the spread of deepfake content. Collaboration between tech experts, researchers, and policymakers is essential to develop effective solutions that protect individuals from the harmful effects of deepfakes.

As citizens, we can raise awareness about the dangers of deepfakes and encourage discussions on this issue. By being informed and vocal about the impact of these technologies, we can collectively push for the necessary changes to ensure a safer digital environment for all.

What can a victim of deepfakes do?

If someone has created deepfakes of you or someone you know, here's what you can do:

If the person threatens or blackmails you with deepfakes:

Be strong and don't break

File a police complaint: File a complaint against the person under your local or national laws so that the police can act on it. As of now, India lacks specific laws or regulations targeted at banning or controlling the use of deepfake technology. However, certain provisions within existing laws, such as Sections 67 and 67A of the Information Technology Act (2000), may be applicable to address certain aspects of deepfakes, such as defamation and the dissemination of explicit material. (This is not legal advice.)

Consult a Lawyer: Next, you can consult with a lawyer who specializes in internet privacy and defamation issues. They can guide you on the legal options available in your jurisdiction.

If the person uploaded deepfakes on a platform:

Ask the platform to remove the content: Yes, you can ask a platform to remove deepfake content. Here's a list of popular platforms and report pages:

What's happening to control Deepfakes right now?

Governments and big tech companies are realizing the impact and risks of deepfakes and AI. Governments are introducing new policies and stricter laws to stop the use of deepfakes for malicious purposes.

On the other hand, companies like Adobe, Microsoft, Intel, Sony, Truepic and the BBC have come together to tackle fake and manipulated content on the Internet through the Coalition for Content Provenance and Authenticity (C2PA). The coalition publishes a set of technical specifications for both software and hardware providers that attach provenance metadata to media files like images, audio and videos. The metadata is then digitally signed using cryptography, which makes each file tamper-evident. So, even when someone changes a single pixel of the original image, the change can be detected.
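To make the signing idea a little more concrete, here is a minimal Python sketch of how binding metadata to a hash of the content lets a single changed byte be detected. It is only an illustration and not the C2PA format itself: the real standard relies on certificate-based public-key signatures and its own manifest structure, while this sketch uses an HMAC from the standard library as a stand-in. The key, the function names `sign_media` and `verify_media`, and the metadata fields are all assumptions made up for this example.

```python
import hashlib
import hmac
import json

# Hypothetical signing key, for illustration only. The real standard uses
# certificate-based public-key signatures, not a shared secret like this.
SIGNING_KEY = b"demo-key-not-for-real-use"


def sign_media(pixels: bytes, metadata: dict) -> dict:
    """Bind the metadata to a hash of the content, then sign the result."""
    manifest = {
        "metadata": metadata,
        "content_sha256": hashlib.sha256(pixels).hexdigest(),
    }
    # Serialize deterministically so signing and verifying see the same bytes.
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_media(pixels: bytes, signed: dict) -> bool:
    """Return True only if both the manifest and the content are untouched."""
    payload = json.dumps(signed["manifest"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signed["signature"]):
        return False  # the manifest (metadata or stored hash) was altered
    actual = hashlib.sha256(pixels).hexdigest()
    return hmac.compare_digest(actual, signed["manifest"]["content_sha256"])


# Stand-in for raw image data: 64 bytes of made-up pixel values.
original = bytes([10, 20, 30, 40] * 16)
signed = sign_media(original, {"creator": "example-camera", "edits": "none"})

# Flip a single bit in the first byte, like changing one pixel very slightly.
tampered = bytearray(original)
tampered[0] ^= 1
tampered = bytes(tampered)

print(verify_media(original, signed))  # True
print(verify_media(tampered, signed))  # False
```

Because the hash of the content changes completely when even one bit changes, the tampered copy no longer matches the signed manifest, and editing the manifest itself breaks the signature.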

In my opinion, it should be integrated into Google Lens, gallery apps and file managers, which would help us easily identify manipulated files and tell them apart from the originals.

Conclusion

The rise of deepfake images poses a serious threat to individuals and society as a whole. As we navigate the technological landscape, it is crucial to be aware of the dangers associated with A.I. misuse and take proactive measures to combat its negative consequences. Together, we can create a safer digital world, where women can thrive without the fear of falling victim to deepfake manipulation. Let us harness the power of technology responsibly and protect the integrity and dignity of every individual, regardless of gender.

Reference

Here's a list of articles and documents I referred to while researching for this blog post:

Reddit bans 'deepfakes' face-swap porn community - The Guardian

What is Deep Learning? - Western Governors University

Deepfake bots on Telegram make the work of creating fake nudes dangerously easy - The Verge